One thing developers can count on when building a conversational AI is that users will inevitably enter prompts that are unknown to the AI or outside its scope. Depending on a chatbot’s intended use, it can be easy to break it by asking odd questions that have very little to do with the product or service the brand offers. In our experience, chatbots often fail even at the “small talk” level, long before reaching any edge case that would justify their struggle.

Although every chatbot team is prepared for the unpleasant moment when the bot cannot detect the user intent, it is far from the preferred “happy path”. It typically happens when a user does not play by the design rules the chatbot was built around, but it can just as easily happen when the customer uses terms the chatbot already knows.

But then how can we predict the behaviour of our chatbot when it’s confronted with unexpected user inputs? And how can we improve the outcome of such encounters?

As an advanced bot is used and faced with new, unforeseen input from different users, it uses this data to train and become more accurate over time. We can wait for users to come up with meaningful unexpected intents, or we can teach the bot ourselves.

Botium AI-powered Data Generator

To tackle this pain point head-on, we have introduced a new feature in Botium. Our solution, the AI-powered Data Generator, integrates OpenAI’s GPT-3 to create relevant user examples for your organisation’s chatbot. GPT-3 is an advanced AI system that generates natural language.

Once you click on the Test Data Wizard item, you can reach the dashboard, where you are asked to fill out two important fields:

Chatbot Domain: Chatbots in specific domains are focused on particular topics. They have access to the knowledge within these domains in order to converse intelligently. Examples of domains include healthcare, education, business and human resources. Botium also offers a variety of predefined categories to simplify this choice.

Description of the Chatbot: The description should be narrow and specific to the concerns and tasks of the organisation the chatbot serves. Example: ‘A banking chatbot for money transfer and account balance.’

Once you provide this data, the AI-assisted test generator produces the user inputs that are most likely to come up in the chatbot’s field.

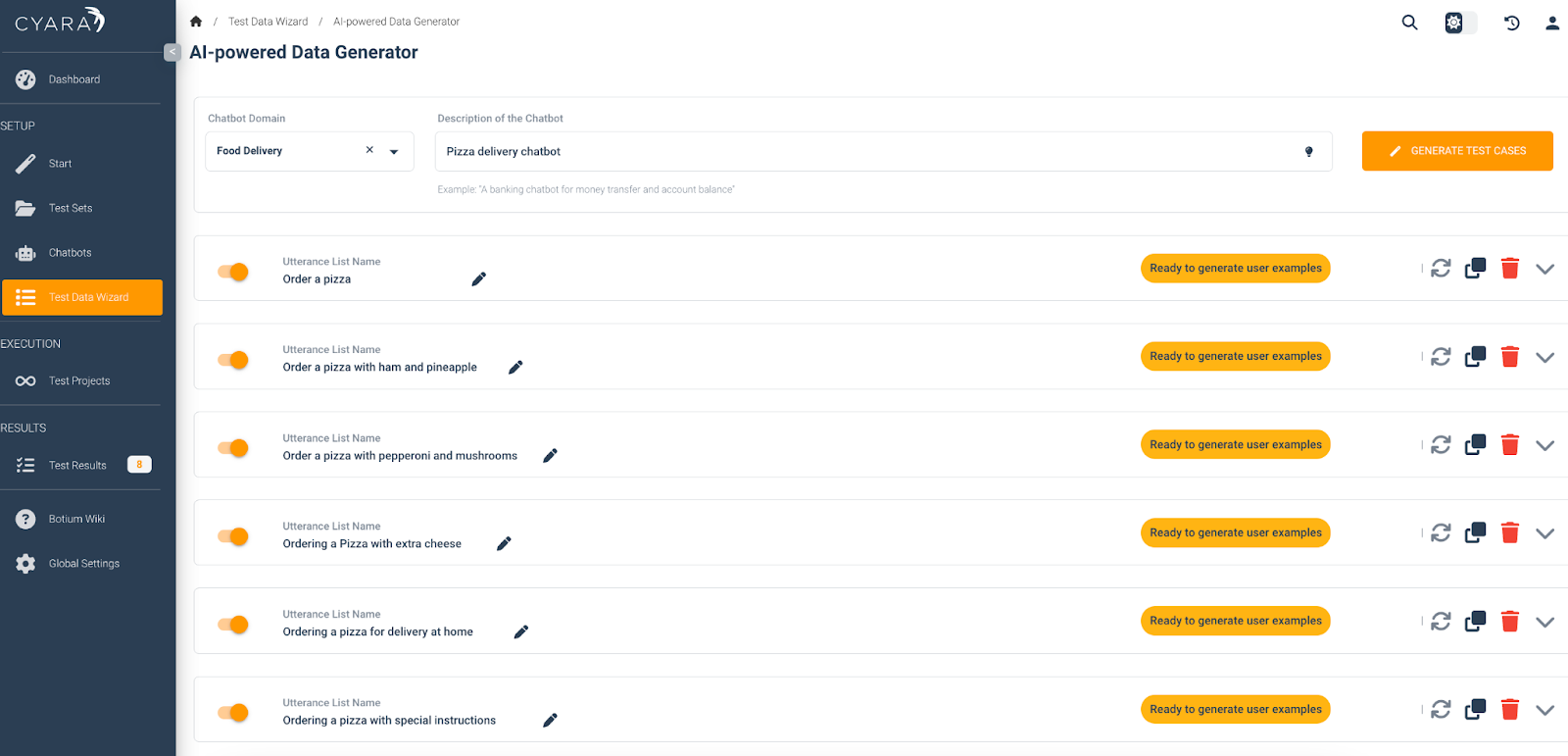

To demonstrate how this works, I chose the Chatbot Domain “Food Delivery”, entered the description “Pizza ordering chatbot” and clicked on the magic wand to generate test cases.

The AI-assisted Test Generator produced seven suggested topics the chatbot should be able to handle based on its domain and description, such as cancel order, order pizza with toppings, or order a pizza with extra cheese (who wouldn’t want that?!).

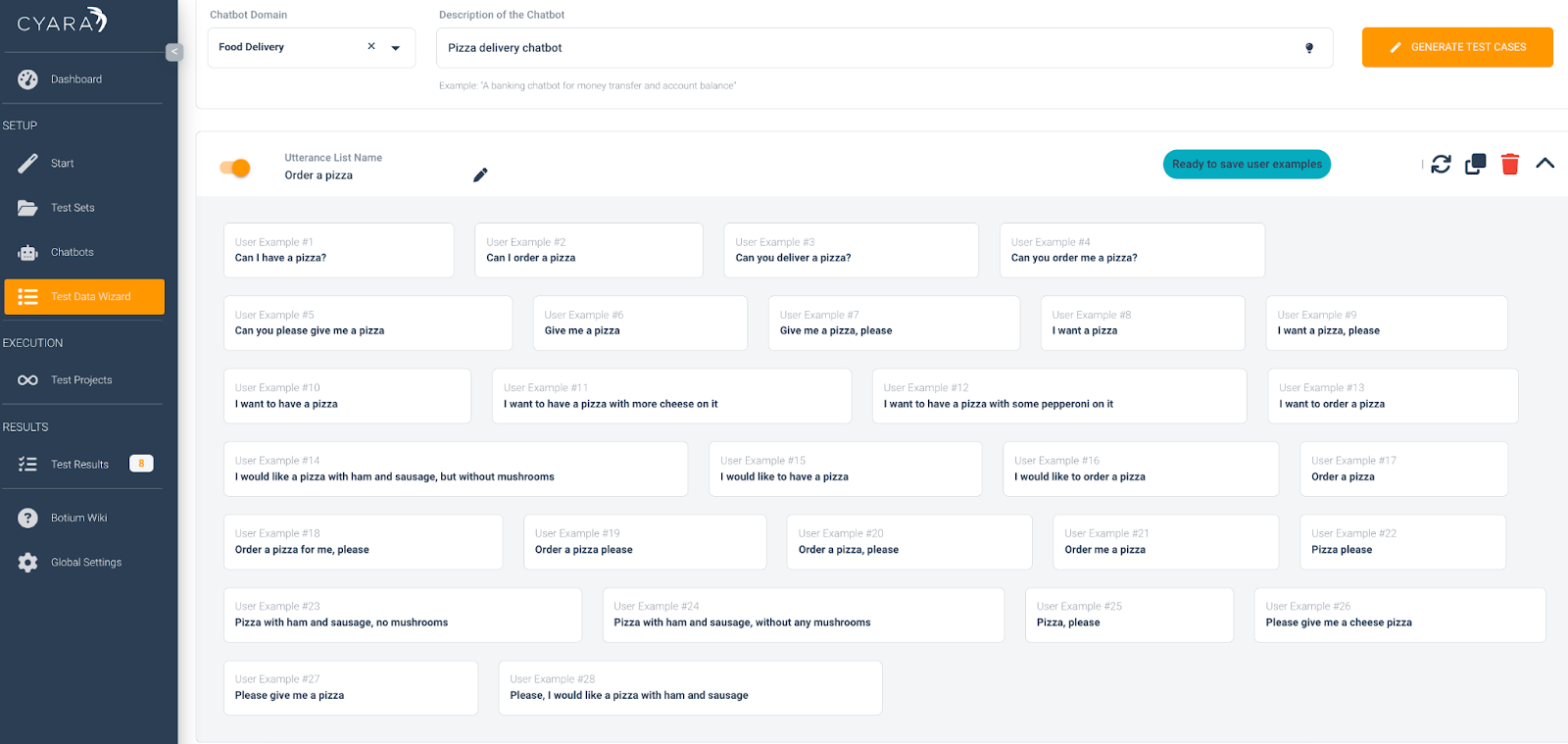

Once satisfied with the generated topics, we can then “generate scripts” to create hundreds of user examples the chatbot will be most likely to face in production.

If you are not completely satisfied with the outcome, you can ask Botium to regenerate the lists with the circle arrows icon.

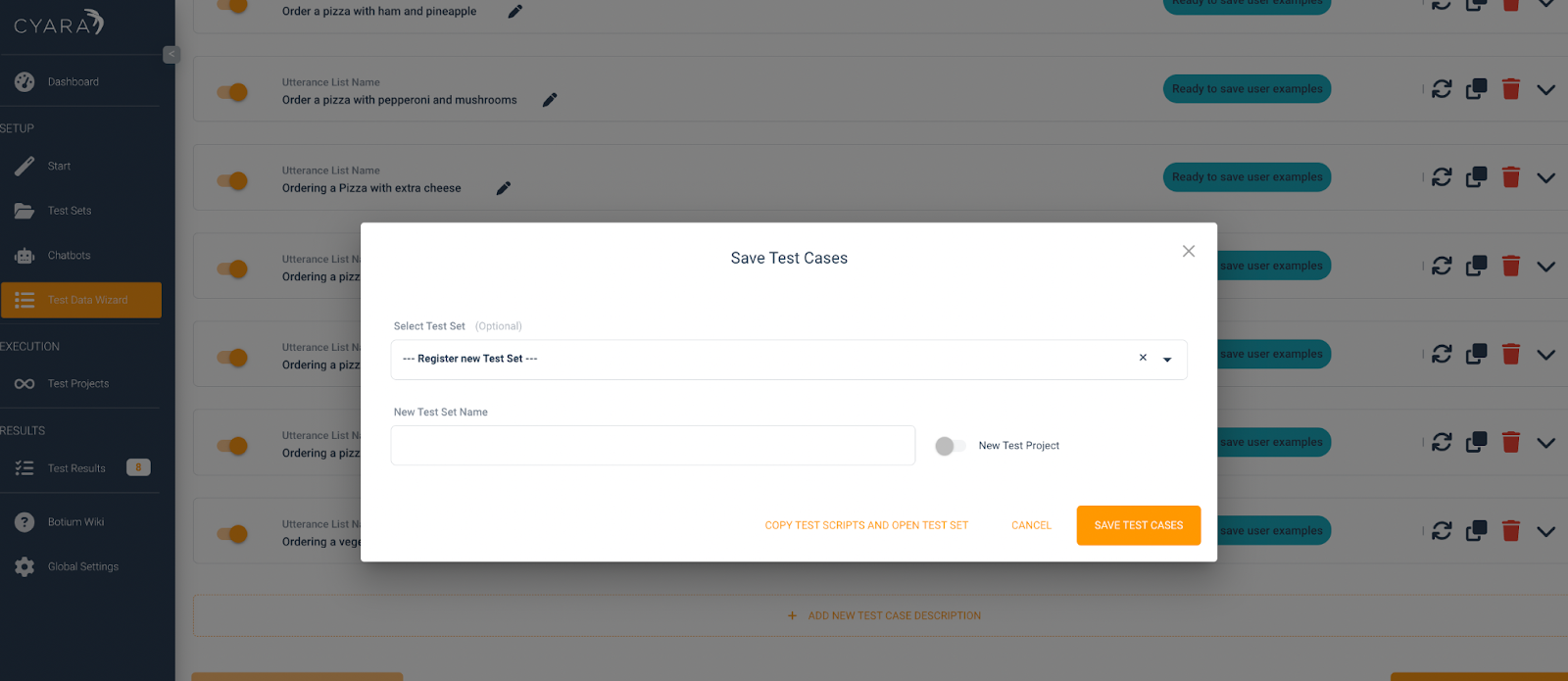

Once you’re done with the configuration and you’ve selected the examples that seem most useful to you, all you have to do is save the scripts and decide whether to add them to an existing test set or start fresh with a new test project. You can then run the AI-generated tests at any time.

When is it Applicable?

The AI-assisted test cases can be used for several different purposes and can improve your chatbot in many different ways.

1. Ease your first steps

One of the biggest challenges in developing a chatbot is clarifying the topics it should be able to handle. At this stage, real user input is often not yet known, so the expectations of the chatbot team may not be aligned with the reality of how the chatbot will be used.

There are several best practices for detecting the hottest requests. The most common is to analyse the incoming questions your customer service team handles daily, weigh them according to their importance and group them into topics based on this analysis, to ensure the chatbot can handle incoming user input.
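The analysis described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the support log, the topic labels and the importance weights are all invented for the example, and `rank_topics` is a hypothetical helper, not part of Botium.

```python
from collections import Counter

# Hypothetical sample of customer service questions, each hand-labelled
# with a topic. Real data would come from your own support channels.
SUPPORT_LOG = [
    ("Where is my order?", "order status"),
    ("Has my pizza left the shop yet?", "order status"),
    ("How long until delivery?", "order status"),
    ("I need to cancel my order", "cancel order"),
    ("Please cancel, I ordered by mistake", "cancel order"),
    ("Do you have vegan cheese?", "menu question"),
]

# Hypothetical importance weights chosen by the team
# (for example, cancellations may matter more than menu questions).
TOPIC_WEIGHT = {"order status": 1.0, "cancel order": 2.0, "menu question": 0.5}


def rank_topics(log, weights, default_weight=1.0):
    """Score each topic as (number of questions) x (importance weight)
    and return (topic, score) pairs, highest score first."""
    counts = Counter(topic for _, topic in log)
    scores = {
        topic: n * weights.get(topic, default_weight)
        for topic, n in counts.items()
    }
    # Sort by descending score; break ties alphabetically for stable output.
    return sorted(scores.items(), key=lambda item: (-item[1], item[0]))


if __name__ == "__main__":
    for topic, score in rank_topics(SUPPORT_LOG, TOPIC_WEIGHT):
        print(f"{topic}: {score}")
```

With these made-up numbers, "cancel order" ranks above "order status" even though it is asked less often, because the team weighted it higher. The ranking is only as good as the labels and weights that go into it.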

Our AI-assisted Test Generator makes it simple for organisations to generate meaningful user examples that are most likely to come up in their chosen domains.

2. Increase test coverage

Test coverage is a common and important indicator of quality and effectiveness in software testing. It is important to keep in mind, however, that full coverage is impossible in chatbot testing: users can say essentially anything to your bot, so the set of possible test cases is infinitely large.

Although “coverage” is therefore not the most meaningful metric for expressing the quality of a chatbot test set, efforts to increase test coverage will still help make your bot more robust and less error-prone.

If you save an AI-generated topic as a new intent, you broaden what your chatbot understands. If you instead add its user examples to one of the existing intents, you increase the coverage of that intent and make the conversation less likely to fail.

The generated user examples can also support you in identifying meaningless test cases that do not increase coverage.
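One simple way to picture a test case that does not increase coverage is a generated utterance that differs from an existing one only in casing or punctuation. The sketch below assumes that narrow definition of "meaningless"; the helper names `normalise` and `split_redundant` are hypothetical and do not describe how Botium itself makes this decision.

```python
import string


def normalise(utterance: str) -> str:
    """Lowercase, drop ASCII punctuation and collapse whitespace."""
    table = str.maketrans("", "", string.punctuation)
    return " ".join(utterance.lower().translate(table).split())


def split_redundant(existing, candidates):
    """Separate candidate utterances into those that add something new and
    those that only repeat an utterance already seen (after normalising)."""
    seen = {normalise(u) for u in existing}
    new, redundant = [], []
    for candidate in candidates:
        key = normalise(candidate)
        if key in seen:
            redundant.append(candidate)
        else:
            seen.add(key)
            new.append(candidate)
    return new, redundant


if __name__ == "__main__":
    existing = ["I want to order a pizza"]
    generated = [
        "i want to order a pizza!",
        "Can I get a pizza with extra cheese?",
        "I want to order a pizza.",
    ]
    new, redundant = split_redundant(existing, generated)
    print("adds coverage:", new)
    print("redundant:", redundant)
```

Exact matching after normalisation is deliberately conservative. Paraphrases such as “I feel like eating a pizza” are kept, since they are exactly the kind of variation a test set should include.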

3. Test your chatbot for unexpected user inputs

It goes without saying that people don’t all express themselves with the same words. The chatbot nevertheless needs to understand the intent behind an unfamiliar sentence.

Intents allow your chatbot to understand what the user wants it to do. An intent categorises typical user requests by the tasks and actions that your bot performs. The ordering intent of our pizza chatbot, for example, covers a direct request, “I want to order a pizza”, as well as one that only implies the action: “I feel like eating a pizza”. Although they are expressed differently, the request behind the two user examples is the same.
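To make the idea concrete, here is a minimal sketch of an intent test in Python. Everything in it is an assumption for illustration: the intent names, the test set, and especially `toy_classifier`, which is a naive keyword matcher standing in for a real NLU engine. It is not Botium's API.

```python
from typing import Callable, Dict, List, Tuple

# Hypothetical test data: each intent lists several ways of expressing it.
TEST_SET: Dict[str, List[str]] = {
    "order_pizza": ["I want to order a pizza", "I feel like eating a pizza"],
    "cancel_order": ["Please cancel my order"],
}


def find_misclassified(
    classify: Callable[[str], str], test_set: Dict[str, List[str]]
) -> List[Tuple[str, str, str]]:
    """Run every utterance through the classifier and collect
    (utterance, expected intent, predicted intent) for each mismatch."""
    failures = []
    for expected, utterances in test_set.items():
        for utterance in utterances:
            predicted = classify(utterance)
            if predicted != expected:
                failures.append((utterance, expected, predicted))
    return failures


def toy_classifier(utterance: str) -> str:
    """Stand-in for a real NLU engine: naive keyword matching only."""
    text = utterance.lower()
    if "cancel" in text:
        return "cancel_order"
    if "order" in text and "pizza" in text:
        return "order_pizza"
    return "fallback"


if __name__ == "__main__":
    for utterance, expected, predicted in find_misclassified(
        toy_classifier, TEST_SET
    ):
        print(f"{utterance!r}: expected {expected}, got {predicted}")
```

The keyword matcher handles the direct request but sends “I feel like eating a pizza” to the fallback, because the sentence never mentions ordering. That is the kind of gap a wider set of user examples is meant to expose.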

With the Botium AI-assisted test generator, you can better enable your chatbot to understand these unexpected user inputs. Ready to put this to the test? We’re here to help! Contact Cyara today to learn more from our Botium experts.